Update 2nd April 2025: The article now includes code for image generation and a cleaner approach to injecting the iOS implementation using Koin.

The general availability of Vertex AI in Firebase was announced recently. In this article we’ll show how to use the associated Android and iOS SDKs in a Compose/Kotlin Multiplatform project. The code shown is included in the VertexAI-KMP-Sample repository.

Vertex AI in Firebase is now generally available.

— Firebase (@Firebase) October 21, 2024

You can confidently use our Kotlin, Swift, Web and Flutter SDKs to release your AI features into production, knowing they're backed by Google Cloud and Vertex AI quality standards.

Discover more ↓ https://t.co/SoOfAT9SOz

Setup

We initially performed the following steps:

- Created a new Compose/Kotlin Multiplatform project using the Kotlin Multiplatform Wizard (with the “Share UI” option enabled).

- Created a new Firebase project and enabled use of Vertex AI for that project.

- Added Android and iOS apps in the Firebase console. The associated google-services.json and GoogleService-Info.plist files were then downloaded and added to the Android and iOS projects.

Shared KMP code setup

We use the following libraries in shared code. The related changes to the build config for the KMP module are shown below.

- Firebase (for the Vertex AI APIs)

- Kotlinx Serialization (for parsing the Vertex JSON response)

- Markdown (for displaying the Vertex markdown response)

- Coil (CMP library for rendering images)

- Koin (dependency injection along with support for KMP ViewModel)

libs.versions.toml

    [versions]
    ...
    firebaseBom = "33.12.0"
    koin = "4.0.4"
    kotlinx-serialization = "1.8.0"
    markdownRenderer = "0.27.0"
    coil = "3.1.0"

    [libraries]
    ...
    firebase-bom = { module = "com.google.firebase:firebase-bom", version.ref = "firebaseBom" }
    firebase-vertexai = { module = "com.google.firebase:firebase-vertexai" }
    coil-compose = { module = "io.coil-kt.coil3:coil-compose", version.ref = "coil" }
    koin-core = { module = "io.insert-koin:koin-core", version.ref = "koin" }
    koin-compose-viewmodel = { module = "io.insert-koin:koin-compose-viewmodel", version.ref = "koin" }
    kotlinx-serialization = { group = "org.jetbrains.kotlinx", name = "kotlinx-serialization-core", version.ref = "kotlinx-serialization" }
    kotlinx-serialization-json = { group = "org.jetbrains.kotlinx", name = "kotlinx-serialization-json", version.ref = "kotlinx-serialization" }
    markdown-renderer = { module = "com.mikepenz:multiplatform-markdown-renderer-m3", version.ref = "markdownRenderer" }

    [plugins]
    ...
    googleServices = { id = "com.google.gms.google-services", version.ref = "googleServices" }
    kotlinxSerialization = { id = "org.jetbrains.kotlin.plugin.serialization", version.ref = "kotlin" }

build.gradle.kts

    plugins {
        ...
        alias(libs.plugins.kotlinxSerialization)
        alias(libs.plugins.googleServices)
    }

    androidMain.dependencies {
        ...
        implementation(project.dependencies.platform(libs.firebase.bom))
        implementation(libs.firebase.vertexai)
    }

    commonMain.dependencies {
        ...
        implementation(libs.kotlinx.serialization)
        implementation(libs.kotlinx.serialization.json)
        implementation(libs.markdown.renderer)
        implementation(libs.koin.core)
        implementation(libs.koin.compose.viewmodel)
        implementation(libs.coil.compose)
    }

Using Vertex AI

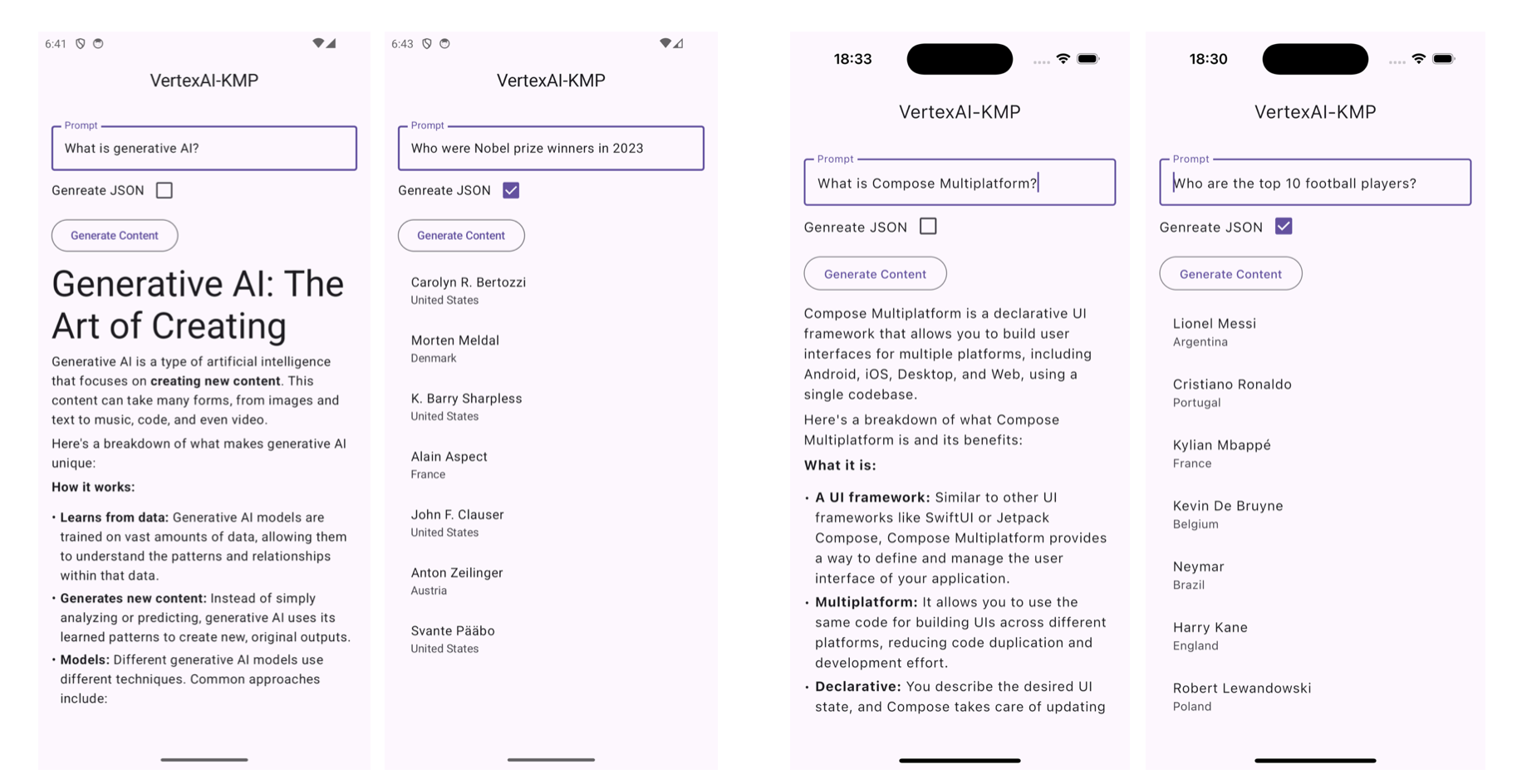

We’re making use of 3 Vertex AI features in this sample (illustrated in the screenshots above):

- text generation with a markdown response (rendered using the Markdown CMP library)

- structured JSON generation (with custom rendering of the result)

- image generation (using Imagen 3)

To support Android and iOS specific implementations of this functionality we created the following interface in shared code (commonMain).

GenerativeModel.kt

    interface GenerativeModel {
        suspend fun generateTextContent(prompt: String): String?
        suspend fun generateJsonContent(prompt: String): String?
        suspend fun generateImage(prompt: String): ByteArray?
    }
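Because shared code depends only on this interface, it can be exercised without Firebase. The following is a minimal sketch of that idea, not something taken from the sample: `FakeGenerativeModel` and its canned responses are hypothetical, and the interface is repeated only so that the snippet is self-contained.

```kotlin
// The interface from the article, repeated so the sketch compiles on its own.
interface GenerativeModel {
    suspend fun generateTextContent(prompt: String): String?
    suspend fun generateJsonContent(prompt: String): String?
    suspend fun generateImage(prompt: String): ByteArray?
}

// Hypothetical fake (not part of the sample): returns canned data
// instead of calling Vertex AI.
class FakeGenerativeModel : GenerativeModel {
    override suspend fun generateTextContent(prompt: String): String? =
        "**Echo:** $prompt"

    override suspend fun generateJsonContent(prompt: String): String? =
        """[{"name":"Ada Lovelace","country":"United Kingdom"}]"""

    // The fake never produces an image.
    override suspend fun generateImage(prompt: String): ByteArray? = null
}

suspend fun main() {
    val model: GenerativeModel = FakeGenerativeModel()
    println(model.generateTextContent("Hello"))
    println(model.generateImage("A cat") == null)
}
```

A fake like this could be registered in a test or preview Koin module in place of the platform implementations.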

This is implemented on Android as follows (in the androidMain source set of the shared KMP module), where we make use of the Vertex AI Android SDK. We’re also defining the schema here that Vertex will use when generating the JSON response.

For this sample we’re using a specific schema that supports a range of prompts that return a list of people (for example as shown in the screenshots above). The response is parsed using the Kotlinx Serialization library and rendered as a simple list in our shared Compose Multiplatform UI code.
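The parsing step can be sketched roughly as follows. The article does not show the `Entity` class, so its shape here is an assumption inferred from the "name" and "country" properties of the schema; `parseEntities` and the `ignoreUnknownKeys` setting are likewise illustrative choices rather than the sample's code. The snippet needs the Kotlinx Serialization plugin and JSON library configured earlier.

```kotlin
import kotlinx.serialization.Serializable
import kotlinx.serialization.json.Json

// Assumed shape: mirrors the "name" and "country" properties of the schema.
@Serializable
data class Entity(val name: String, val country: String)

// Illustrative choice: tolerate extra keys in case the model returns
// more properties than the schema asked for.
private val json = Json { ignoreUnknownKeys = true }

// Hypothetical helper that decodes the JSON array returned by the model.
fun parseEntities(response: String): List<Entity> =
    json.decodeFromString<List<Entity>>(response)

fun main() {
    val sample = """[{"name":"Marie Curie","country":"Poland"}]"""
    println(parseEntities(sample))
}
```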

GenerativeModel.android.kt

    class GenerativeModelAndroid: GenerativeModel {
        private val jsonSchema = Schema.array(
            Schema.obj(
                mapOf(
                    "name" to Schema.string(),
                    "country" to Schema.string()
                )
            )
        )

        override suspend fun generateTextContent(prompt: String): String? {
            val generativeModel = Firebase.vertexAI.generativeModel(
                modelName = "gemini-1.5-flash"
            )
            return generativeModel.generateContent(prompt).text
        }

        override suspend fun generateJsonContent(prompt: String): String? {
            val generativeModel = Firebase.vertexAI.generativeModel(
                modelName = "gemini-1.5-flash",
                generationConfig = generationConfig {
                    responseMimeType = "application/json"
                    responseSchema = jsonSchema
                }
            )
            return generativeModel.generateContent(prompt).text
        }

        override suspend fun generateImage(prompt: String): ByteArray? {
            val imageModel = Firebase.vertexAI.imagenModel(
                modelName = "imagen-3.0-generate-002"
            )
            val imageResponse = imageModel.generateImages(prompt)
            return if (imageResponse.images.isNotEmpty()) {
                imageResponse.images.first().data
            } else {
                null
            }
        }
    }

On iOS this is implemented in the following Swift code (using the Vertex AI iOS SDK in this case). We also define the above-mentioned schema here. A future enhancement would be to define this schema in a generic format in shared code and then translate it in the Android and iOS implementations.

GenerativeModelIOS

    class GenerativeModelIOS: ComposeApp.GenerativeModel {
        static let shared = GenerativeModelIOS()

        let vertex = VertexAI.vertexAI()

        let jsonSchema = Schema.array(
            items: .object(
                properties: [
                    "name": .string(),
                    "country": .string()
                ]
            )
        )

        func generateTextContent(prompt: String) async throws -> String? {
            let model = vertex.generativeModel(
                modelName: "gemini-1.5-flash"
            )
            return try await model.generateContent(prompt).text
        }

        func generateJsonContent(prompt: String) async throws -> String? {
            let model = vertex.generativeModel(
                modelName: "gemini-1.5-flash",
                generationConfig: GenerationConfig(
                    responseMIMEType: "application/json",
                    responseSchema: jsonSchema
                )
            )
            return try await model.generateContent(prompt).text
        }

        func generateImage(prompt: String) async throws -> KotlinByteArray? {
            let model = vertex.imagenModel(modelName: "imagen-3.0-generate-002")
            let response = try await model.generateImages(prompt: prompt)

            guard let image = response.images.first else {
                return nil
            }

            let imageData = image.data
            return imageData.toByteArray()
        }
    }
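The future enhancement mentioned above, a schema defined once in shared code, might look something like the following sketch. Everything here is hypothetical: `SharedSchema`, `peopleSchema` and `render` are not part of the sample, and `render` only prints the tree as text to show the traversal. A real Android or iOS translator would walk the same tree and build the platform SDK's own Schema type instead.

```kotlin
// Hypothetical platform-neutral schema description for shared (commonMain) code.
sealed interface SharedSchema {
    data object Text : SharedSchema
    data class Obj(val properties: Map<String, SharedSchema>) : SharedSchema
    data class Arr(val items: SharedSchema) : SharedSchema
}

// The schema used in the article: a list of objects with "name" and "country".
val peopleSchema = SharedSchema.Arr(
    SharedSchema.Obj(
        mapOf("name" to SharedSchema.Text, "country" to SharedSchema.Text)
    )
)

// Stand-in translator: renders the tree as text. Platform code would
// follow the same recursion but produce the SDK's Schema objects.
fun render(schema: SharedSchema): String = when (schema) {
    SharedSchema.Text -> "string"
    is SharedSchema.Obj -> schema.properties.entries
        .joinToString(prefix = "{", postfix = "}") { (key, value) -> "$key: ${render(value)}" }
    is SharedSchema.Arr -> "[${render(schema.items)}]"
}

fun main() {
    println(render(peopleSchema)) // prints [{name: string, country: string}]
}
```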

The iOS implementation of GenerativeModel is passed down to shared code when initialising Koin. Note that this is also where we initialise Firebase on iOS.

    @main
    struct iOSApp: App {
        init() {
            FirebaseApp.configure()
            initialiseKoin(generativeModel: GenerativeModelIOS.shared)
        }

        var body: some Scene {
            WindowGroup {
                ContentView()
            }
        }
    }

The iOS-specific instance of GenerativeModel is added to the Koin object graph and is what will be injected into our shared view model when running on iOS.

Koin.ios.kt

    fun initialiseKoin(generativeModel: GenerativeModel) {
        startKoin {
            modules(
                commonModule,
                module { single<GenerativeModel> { generativeModel } }
            )
        }
    }

The Android version of Koin initialisation in turn adds GenerativeModelAndroid to the object graph.

Koin.android.kt

    fun initialiseKoin() {
        startKoin {
            modules(
                commonModule,
                module { single<GenerativeModel> { GenerativeModelAndroid() } }
            )
        }
    }

Android client setup

We initialise Firebase and Koin in our main Android application class.

VertexAIKMPApp.kt

    class VertexAIKMPApp : Application() {
        override fun onCreate() {
            super.onCreate()
            FirebaseApp.initializeApp(this)
            initialiseKoin()
        }
    }

Shared ViewModel

We invoke the above APIs in the following shared view model (we’re using the KMP Jetpack ViewModel library here). It includes the generateContent function, which the Compose UI code calls with the text prompt and a flag indicating whether to generate a JSON response. If generateJson is set, we also parse the response and return the structured data to the UI code, which renders it as a basic list (as shown in the screenshots above). We also invoke generateImage from here.

GenerativeModelViewModel.kt

    class GenerativeModelViewModel(private val generativeModel: GenerativeModel) : ViewModel() {
        val uiState = MutableStateFlow<GenerativeModelUIState>(GenerativeModelUIState.Initial)

        fun generateContent(prompt: String, generateJson: Boolean) {
            uiState.value = GenerativeModelUIState.Loading
            viewModelScope.launch {
                // Assign the result of the whole try/catch so that failures also reach the UI
                uiState.value = try {
                    if (generateJson) {
                        val response = generativeModel.generateJsonContent(prompt)
                        if (response != null) {
                            val entities = Json.decodeFromString<List<Entity>>(response)
                            GenerativeModelUIState.Success(entityContent = entities)
                        } else {
                            GenerativeModelUIState.Error("Error generating content")
                        }
                    } else {
                        val response = generativeModel.generateTextContent(prompt)
                        GenerativeModelUIState.Success(textContent = response)
                    }
                } catch (e: Exception) {
                    GenerativeModelUIState.Error(e.message ?: "Error generating content")
                }
            }
        }

        fun generateImage(prompt: String) {
            uiState.value = GenerativeModelUIState.Loading
            viewModelScope.launch {
                uiState.value = try {
                    val imageData = generativeModel.generateImage(prompt)
                    GenerativeModelUIState.Success(imageData = imageData)
                } catch (e: Exception) {
                    GenerativeModelUIState.Error(e.message ?: "Error generating content")
                }
            }
        }
    }
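The view model refers to a `GenerativeModelUIState` type that isn't shown in the article. Judging from how it is used (`Initial`, `Loading`, `Success` with three optional payloads, and `Error` with a message), it could be modelled along the following lines. This is a reconstruction under those assumptions, not the sample's actual definition; `Entity` is simplified here (no serialization annotation) and `describe` is a hypothetical helper included only to exercise the states.

```kotlin
// Assumed, simplified shape of the entity parsed from the JSON response.
data class Entity(val name: String, val country: String)

// Hypothetical reconstruction of the UI state, inferred from its usage.
sealed interface GenerativeModelUIState {
    data object Initial : GenerativeModelUIState
    data object Loading : GenerativeModelUIState

    // Only one of the three payloads is expected to be set at a time.
    data class Success(
        val textContent: String? = null,
        val entityContent: List<Entity>? = null,
        val imageData: ByteArray? = null
    ) : GenerativeModelUIState

    data class Error(val message: String) : GenerativeModelUIState
}

// Hypothetical helper showing an exhaustive `when` over the states.
fun describe(state: GenerativeModelUIState): String = when (state) {
    GenerativeModelUIState.Initial -> "idle"
    GenerativeModelUIState.Loading -> "loading"
    is GenerativeModelUIState.Success -> when {
        state.entityContent != null -> "${state.entityContent.size} entities"
        state.textContent != null -> "text"
        state.imageData != null -> "image"
        else -> "empty"
    }
    is GenerativeModelUIState.Error -> "error: ${state.message}"
}

fun main() {
    println(describe(GenerativeModelUIState.Success(textContent = "# Hi")))
    println(describe(GenerativeModelUIState.Error("Network unavailable")))
}
```

A sealed hierarchy like this lets the compiler check that every state is handled in a `when`.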

Shared Compose Multiplatform UI code

Finally, the following is taken from the shared Compose UI code that’s used to render the response.

    is GenerativeModelUIState.Success -> {
        if (uiState.entityContent != null) {
            LazyColumn {
                items(uiState.entityContent) { item ->
                    ListItem(
                        headlineContent = { Text(item.name) },
                        supportingContent = { Text(item.country) }
                    )
                }
            }
        } else if (uiState.textContent != null) {
            Markdown(uiState.textContent)
        } else if (uiState.imageData != null) {
            AsyncImage(
                model = ImageRequest
                    .Builder(LocalPlatformContext.current)
                    .data(uiState.imageData)
                    .build(),
                contentDescription = prompt,
                contentScale = ContentScale.Fit,
                modifier = Modifier.fillMaxWidth()
            )
        }
    }

Featured in Android Weekly #647 and Kotlin Weekly Issue #432

Related tweet

Using Vertex AI in a Compose/Kotlin Multiplatform project https://t.co/k6P1cO5zly

— John O'Reilly (@joreilly) October 27, 2024